Proof-of-Archival-Storage

Public Parameters and Values

Protocol Constants

These parameters are fixed at the beginning of the protocol and used by all clients.

-

SLOT_PROBABILITY: the probability of successful block in a slot (active slots coefficient), currently 1/6. This defines the expected block production rate of 1 block every 6 seconds -

INITIAL_SOLUTION_RANGE: solution range for proof-of-replication challenge for the first era -

ERA_DURATION_IN_BLOCKS: solution range is adjusted after this many blocks, currently 2016 (as in Bitcoin) -

KZG_PUBLIC_PARAMETERS: universal reference values for KZG Polynomial Commitment scheme obtained through an MPC ceremony -

KZG_MAX_DEGREEis : the maximum degree of the polynomial possible to commit to under the KZG scheme -

CONFIRMATION_DEPTH_K: minimal depth after which a block can enter the recorded history (a global constant, as opposed to the client-dependent transaction confirmation depth), currently 100 -

EXPECTED_VOTES_PER_BLOCK: number of votes expected per block, currently 9 -

SAFE_BYTESis 31 bytes: the size into which raw history data is partitioned before Archiving for KZG commitment, required to ensure that values fit the size of the point subgroup of BLS12-381 curve -

FULL_BYTESis 32 bytes: the size into which encoded data is partitioned after Archiving -

NUM_CHUNKSis : number of KZG field elements that compose a record,NUM_CHUNKS≤KZG_MAX_DEGREE. -

NUM_S_BUCKETS: number of s-buckets in a record (and by extension in plot sector), currently (NUM_CHUNKS / ERASURE_CODING_RATE) -

RAW_RECORD_SIZEis 1 MiB: the size of a record of raw blockchain history before Archiving to be transformed into a piece (SAFE_BYTES * NUM_CHUNKS = 1015808 b) -

NUM_RAW_RECORDSis 128: the number of raw records in a Recorded History Segment -

RECORDED_HISTORY_SEGMENT_SIZEis roughly 128 MiB: size of raw recorded history segment ready for Archiving (RAW_RECORD_SIZE * NUM_RAW_RECORDS) -

RECORD_SIZEis 1 MiB: the size of useful data in one piece (FULL_BYTES * NUM_CHUNKS = 1048576 b) -

WITNESS_SIZEis 48 bytes: the size of KZG witness -

COMMITMENT_SIZEis 48 bytes: the size of KZG commitment -

PIECE_SIZEis 1 MiB: a piece of blockchain history (RECORD_SIZE + COMMITMENT_SIZE + WITNESS_SIZE = 1048672 b)In a piece, the chunks should be full 32 bytes, because after performing field operations (i.e. erasure coding) the resulting field elements are not guaranteed to fit into 31 bytes anymore.

-

ERASURE_CODING_RATE: parameter of erasure code operation, specifies what portion of data is enough to recover it fully in case of loss, currently 1/2 -

NUM_PIECESis 256: the number of pieces in an Archived History Segment after erasure coding (NUM_RAW_RECORDS/ERASURE_CODING_RATE) -

K: space parameter (memory hardness) for proof-of-space, currently 20 -

RECENT_SEGMENTS: number of latest archived segments that are considered Recent History for the purposes of Plotting (currently 3) -

RECENT_HISTORY_FRACTION: fraction of recently archived pieces from theRECENT_SEGMENTSin each sector (currently 1/10) -

MIN_SECTOR_LIFETIME: minimum lifetime of a plotted sector, measured in archived segments, currently 4

Dynamic Blockchain Values

These parameters are derived from the blockchain and are dynamically updated over time.

slot_number: current slot number, derived since genesis from the Proof-of-Time chainsolution_range: farming difficulty parameter, dynamically adjusted to control the block generation ratevoting_solution_range: voting difficulty parameter, dynamically adjusted to control the vote generation rate. This range is larger thansolution_rangeto allow for multiple votes per block (solution_range * (EXPECTED_VOTES_PER_BLOCK+1))global_randomness: used to derive slot challenges, from the Proof-of-Time chainpieces[]: all pieces of the recorded blockchain historyhistory_size: number of archived segments of the blockchain history until this momentsegment_commitments[]: hash map of KZG commitments computed over pieces in a segment and stored in the runtimeBlockList: list of public keys that committed offenses

Consensus Parameters

These parameters are fixed at the beginning of the protocol and used by all clients, however they can be updated if necessary.

max_pieces_in_sector: number of pieces to be retrieved from blockchain history by a farmer under Verifiable Sector Construction (VSC) and encoded in a single sector by an honest farmer, currently 1000sector_size: size of a plotted sector on disk, currently ~1 GiB (max_pieces_in_sector * RECORD_SIZE)

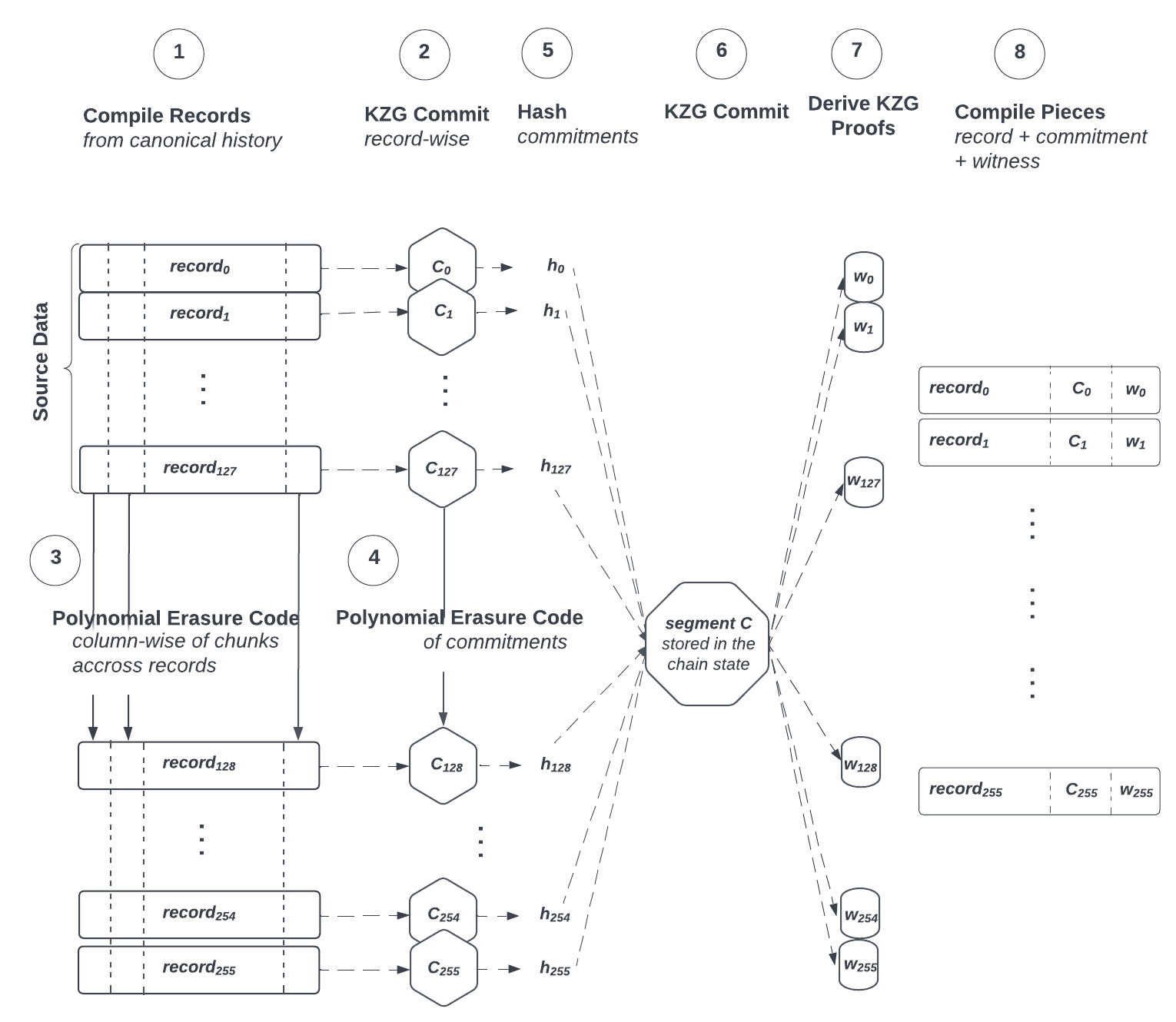

Archiving

Preparing the history

-

The genesis block is archived as soon as it produced. We extend the encoding of the genesis block with extra pseudorandom data up to

RECORDED_HISTORY_SEGMENT_SIZE, such that the very first archived segment can be produced right away, bootstrapping the farming process. This extra data is added to the end of the SCALE-encoded block, so during decoding of the genesis block it'll be ignored. -

Once any block produced after genesis becomes

CONFIRMATION_DEPTH_K-deep, it is included in the recorded history. Recorded history is added to a buffer of capacityRECORDED_HISTORY_SEGMENT_SIZE. -

When added to the buffer, blocks are turned into

SegmentItems. -

Each segment will have parent

segment_headerincluded as the first item. Eachsegment_headerincludes hash of the previous segment header and the segment commitment of the previous segment. Together segment headers form a chain that is used for quick and efficient verification that somepiececorresponds to the actual archival history of the blockchain. -

Segment items are combined into segments. Each segment contains at least two of the following

SegmentItems, in this order:- The previous segment’s

SegmentHeader BlockContinuation, if remained from the previous segmentBlock(s), as many as fit fully into the current segment, may be noneBlockStart, if the next block doesn't fit within the current segmentPadding(zero or more) in case padding bytes are necessary to complete the segment toRECORDED_HISTORY_SEGMENT_SIZE

- The previous segment’s

-

When the buffer (after SCALE-encode) contains enough data to fill a record of

RAW_RECORD_SIZEbytes, it is archived:- Split the record into = =

NUM_CHUNKSchunks of sizeSAFE_BYTES. - Interpolate a

polynomialover the chunks of the record withpoly(record). - Commit to the record polynomial under KZG and obtain the source

record_commitmentasCommit(polynomial). This will allow us to later compute proofs that a chunk belongs to a particular record.

- Split the record into = =

-

Once

NUM_RAW_RECORDS(128) records have been committed, stack them into a matrix ofNUM_RAW_RECORDSrows and columns. Each row is a record. -

Erasure code records column-wise with

extend(column, ERASURE_CODING_RATE)This effectively doubles the number of rows and thus, records per segment to

NUM_PIECES(256). -

Erasure code commitments with

extend(record_commitments, ERASURE_CODING_RATE).By doing this step we can effectively save almost half of the record commitment time in archival. Instead of directly committing to parity records we erasure code the commitments here, which should match the parity record commitments, but being much faster to compute.

-

Hash each

record_commitment, both source and parity, into 254-bit scalarrecord_commitment_hashes values .Commitments are hashed to 254 bits (we hash it as usual and set last 2 bits to 0) to bring them back down to field elements so it becomes possible to KZG commit to them (at cost of losing homomorphic properties). There is no direct way to commit to 48-byte KZG commitments without greatly increasing proof size and computation time (i.e. by using IPP proofs). Committing to the hashes effectively makes a one level Verkle tree.

-

Interpolate a polynomial

segment_polynomialover these hashes and commit to it under KZG to get thesegment_commitmentascommit(Poly(record_commitment_hashes)). -

For each

record_commitment_hashes[i], compute arecord_witnessto thesegment_commitmentascreate_witness(segment_polynomial, i).This will allow us to prove that a record belongs to an archived history segment without having to provide all other segment commitments at a cost of storing additional 48 bytes per piece.

-

For each record, form a

piece = record || record_commitment || record_witness -

Append the

segment_commitmentto the globalsegment_commitments[]table of the chain. -

The segment now consists of

NUM_PIECESrecords of 1MiB each,NUM_PIECESpiece commitments,NUM_PIECESproofs of 48 bytes each and one 48-bytesegment_commitment.The pieces with even indices ((0, 2, …, 254) of this segment) correspond to source records and the pieces with odd indices ((1, 3, …, 255)of this segment) correspond to parity records. The blockchain history data is effectively contained only in pieces with an even

piece_index.

Plotting

Pre-plotting Initialization

Determining and retrieving the assigned portion of the history

-

Given the total allocated disk space, reserve some space () for commitments and other auxiliary information.

-

Create a single plot of

plot_size = allocated_plotting_space. Sizes of farmer plots are independent from size of blockchain history, but must be a multiple ofsector_size. -

Generate a farmer identity, that is a key pair

public_key, secret_keyunder the digital signature scheme. These signing keys are independent from reward address of an entity (exactly as payout address in Bitcoin mining) described in Block reward address. -

Derive an identifier

public_key_hashashash(public_key). This farmer id will also serve as the basis for their single global network peer id in the DHT. -

Determine the

sector_count = plot_size / sector_size. -

Verifiable Sector Construction (VSC):

Determine which pieces are to be downloaded for this sector:

- Index the sectors sequentially and for each sector derive

sector_id = keyed_hash(public_key_hash, sector_index || history_size), wheresector_indexis the sector index in the plot andhistory_sizeis the current history size at the time of sector creation. - For each sector, for each

piece_offsetin0..max_pieces_in_sector, derive thepiece_indexin global blockchain history this slot will contain, as follows:- At the start of the chain, if

history_size <= RECENT_SEGMENTS / RECENT_HISTORY_FRACTIONthe pieces for this sector are selected uniformly aspiece_index = keyed_hash(piece_offset, sector_id) mod (history_size * NUM_PIECES)forpiece_offsetin0..max_pieces_in_sector - Later, when history grows (

history_size > RECENT_SEGMENTS / RECENT_HISTORY_FRACTION) to make sure recent archived history is plotted on farmer storage as soon as possible we selectRECENT_HISTORY_FRACTIONof pieces for each sector from the lastRECENT_SEGMENTSarchived segments. - For

piece_offsetin0..max_pieces_in_sector * RECENT_HISTORY_FRACTION * 2:- If

piece_offsetis odd, select a piece from recent history aspiece_index = keyed_hash(piece_offset, sector_id) mod (RECENT_SEGMENTS * NUM_PIECES) + ((history_size - RECENT_SEGMENTS) * NUM_PIECES) - If

piece_offsetis even, select a piece uniformly from all history aspiece_index = keyed_hash(piece_offset, sector_id) mod (history_size * NUM_PIECES)

- If

- The rest of the pieces for this sector are selected uniformly as

piece_index = keyed_hash(piece_offset, sector_id) mod (history_size * NUM_PIECES)forpiece_offsetin(2 * RECENT_HISTORY_FRACTION * max_pieces_in_sector)..max_pieces_in_sector

- At the start of the chain, if

- Retain the

history_sizecount at the time of sector creation. This sector will expire at a point in the future as described in Sector Expiration.

- Index the sectors sequentially and for each sector derive

-

Retrieve each piece from the Distributed Storage Network (DSN) and verify against segment commitment obtained from the node.

- For each synced sector, proceed to Plotting phase.

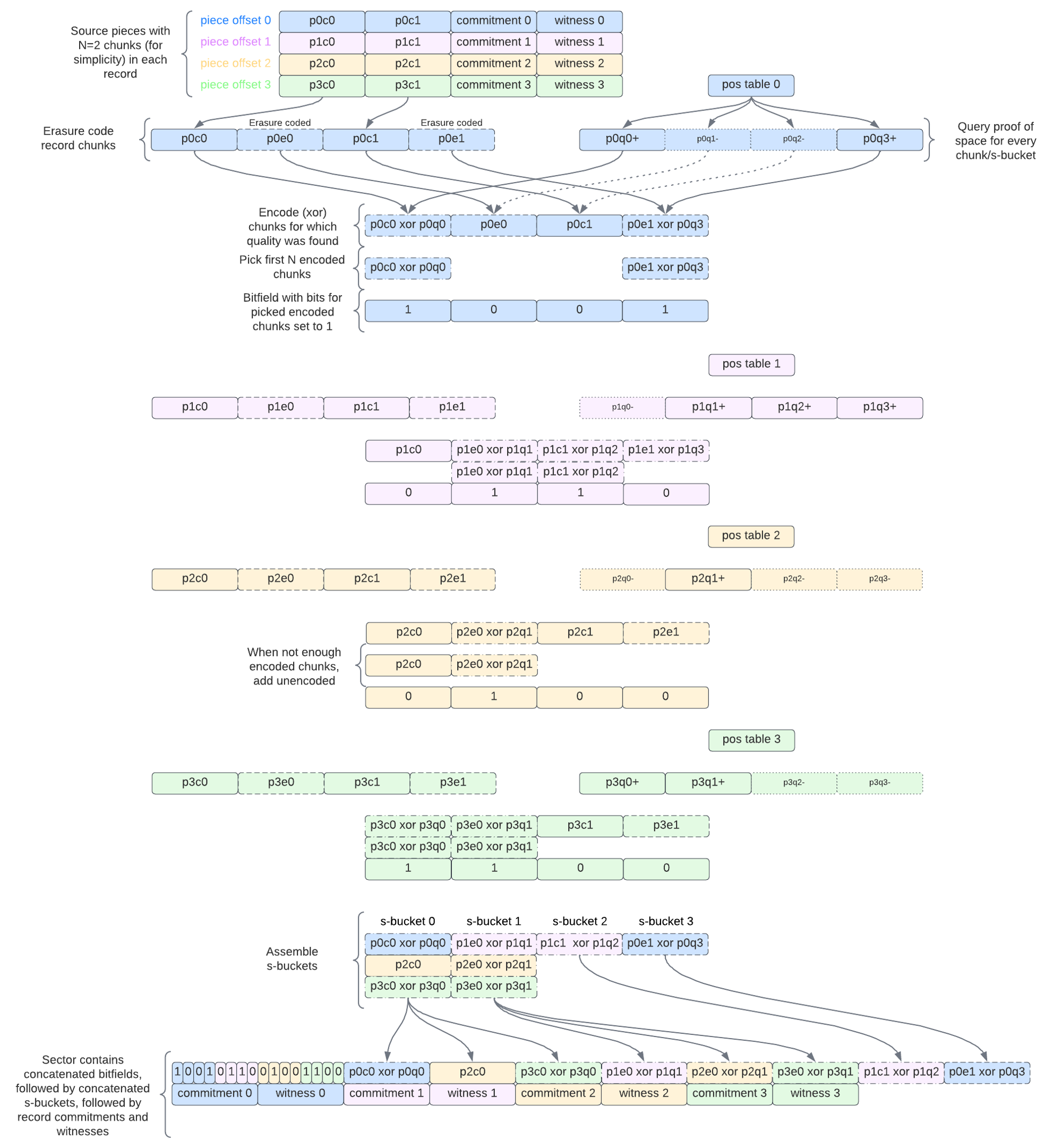

Plotting to Disk

Sealing the history

For each sector, given that all pieces assigned to the sector reside locally in memory, encode pieces row-wise (piece by piece or one piece at a time).

For each piece in the sector:

-

Extract the bundled record from the piece and retain the

record_witnessandrecord_commitmentalongside the plot. -

Split the extracted record into

FULL_BYTES-sizedrecord_chunks, which are guaranteed to contain values that fit into the scalar field. -

Erasure code the record with

extended_chunks = extend(record_chunks, ERASURE_CODING_RATE)- (Optimization, Implemented) It is faster to do FFT over domain with

extendand throw away all values that are not inproven_indicesthen evaluate at proven indices. FFT complexity is expected at while direct evaluation is

- (Optimization, Implemented) It is faster to do FFT over domain with

-

Derive an

evaluation_seedfromsector_idandpiece_offsetwithin this sector asevaluation_seed = hash(sector_id || piece_offset) -

Derive a Chia proof-of-space table

pos_table = generate(K, evaluation_seed) -

Initialize

num_successfully_encoded_chunks = 0to indicate how many chunks were encoded so far. -

Iterate through each

(s_bucket, chunk)inextended_chunks:- Query the

pos_tablefor a valid proof-of-spaceproof_of_spacefor this chunk asfind_proof(pos_table, s_bucket). - If it exists, encode the current chunk as

encode(chunk, hash(proof_of_space))and increasenum_successfully_encoded_chunksby 1. Otherwise, continue to the next chunk. - Place the encoded chunk into a corresponding s-bucket for given index

s_bucketand set corresponding bit in theencoded_chunks_usedbitfield to1.

There is one

encoded_chunks_usedbitfield per record which indicates which s-buckets contain its encoded chunks. - Query the

-

If

num_successfully_encoded_chunks >= NUM_CHUNKSall chunks were encoded successfully, take the necessaryNUM_CHUNKSand proceed to the next record. -

Else, if

num_successfully_encoded_chunks < NUM_CHUNKS, select extra chunks to store at the end after encoded chunks:- Compute

num_unproven = NUM_CHUNKS - num_successfully_encoded_chunks - Save as

extra_chunksthe firstnum_unprovenchunks of the source record corresponding to the indices whereencoded_chunks_usedis not set.

Testing with k=17 showed that the event when

pos_tabledid not contain proofs within possible bucket indices, and thusencoded_chunks_usedhas less than bits set, happened for ~0.8% records we should retain necessary amount of source chunks for the record to be recoverable and provable. These extra chunks cannot participate in farming though. - Compute

-

Once all records are encoded, write the sector to disk, one s-bucket at time in order of the index, each followed by

encoded_chunks_usedbitfield corresponding to selected chunks. -

The final plotted sector consists of

max_pieces_in_sectormanyencoded_chunks_usedbitfields of sizeNUM_S_BUCKETSeach,NUM_S_BUCKETSs-buckets and commitments and witnesses of pieces in this sector.Each

encoded_chunks_usedindicator vector has bit set to1at places corresponding to the record positions whose chunks are encoded in this s-bucket and are eligible for farming.

- Plotting consists of transforming raw sectors into encoded sectors, which can be viewed as converting a row-wise matrix of raw records into a row-wise matrix of encoded records and viewing that as a column-wise matrix of s-buckets.

- Sectors are written to disk in a manner that is optimized for sector audits (as opposed to efficient recovery of pieces).

Sector Expiration

After MIN_SECTOR_LIFETIME segments were archived (since sector creation), the farmer should check whether the sector have expired and can no longer be farmed based on the “age” (history size at plotting) of each sector.

For each sector sector_id and history_size when this sector was plotted:

- When current history size of the chain reaches

sector_expiration_check_history_size = history_size + MIN_SECTOR_LIFETIMEfarmer should check when to expire this sector based on correspondingsegment_commitmentof last archived segment. - Compute

sector_max_lifetime = MIN_SECTOR_LIFETIME + 4 * history_size. With this limitation on sector lifetime, a sector with expire with probability ~50% by the time history doubles since it’s initial plotting point and is guaranteed to expire by the time history doubles again. - Compute

expiration_history_size = hash(sector_id || segment_commitment) mod (sector_max_lifetime - sector_expiration_check_history_size) + sector_expiration_check_history_size - When the current history size reaches

expiration_history_size, expire this sector. - Replot the sector for new history size as described in Plotting.

Farming

Auditing the history

Challenge Generation

- For each

slot_number, compute theglobal_challengeashash(global_randomness||slot_number). Theslot_numberandglobal_randomnessare derived from PoT (see derive_global_randomness). - Compute the

sector_slot_challenge = global_challenge XOR sector_id - Compute the

s_bucket_audit_index = sector_slot_challenge mod NUM_S_BUCKETS

Audit

- Read the corresponding s-bucket at

s_bucket_audit_indexfrom disk into memory. - For each

chunkin the bucket, computeaudit_chunk = keyed_hash(sector_slot_challenge, chunk), truncated to 8 bytes - Check if the result (as integer) falls within +/-

voting_solution_range/2of theglobal_challenge - Sort all the potentially winning

audit_chunksby their “quality” - the closest toglobal_challengefirst. - Prove the best chunk and submit the solution.

Proving

-

For a winning

audit_chunk(8 bytes), derive thechunk_offsetfrom the parent full chunk’s position in the s-bucket. -

Get the full 32 byte

chunkat thatchunk_offset. -

From

encoded_chunks_usedbitfields determine if it is an encoded chunk. -

If not, chunk is not eligible for proving.

-

If yes, then determine parent record

piece_offsetof thischunk. -

Compute the steps 1-7 in Recovering the Record and obtain

plotted_chunks -

Save the

proof_of_spacefor thischunkused for decoding -

Recover the

extended_chunks_polyusing decoded chunks withrecover_poly(plotted_chunks). -

Retrieve the

record_commitment. -

Compute the

chunk_witnessfor the decoded winningchunkthat ties back to therecord_commitmentascreate_witness(extended_chunks_poly, s_bucket_audit_index), and attach both to the solution for verification against the original record commitment stored in the history. -

Retrieve the

record_witnessthat ties back to thesegment_commitment. -

Produce a

solutionconsisting ofstruct Solution {

public_key: 32 bytes

reward_address: 32 bytes

sector_index: 2 bytes

history_size: 8 bytes

piece_offset: 2 bytes

record_commitment: 48 bytes

record_witness: 48 bytes

chunk: 32 bytes

chunk_witness: 48 bytes

proof_of_space: 160 bytes

}

Submitting a solution

If the winning audit_chunk is within solution_range:

- Attach

solutionto block header. - Forge a new

block(as defined in Substrate framework). - Seal the

blockcontent withsign(secret_key, block).

Otherwise, submit a vote extrinsic with solution.

Verification

Ensuring a solution comes from a valid plot

- If the

public_keyof the block's farmer is in the block list, ignore the block. - Verify that the consensus log in the block header is correct. This includes

solution_rangeandglobal_randomness. - Verify that PoT items in the header are correct according to New Blocks

- Verify that

solution_rangeandglobal_randomnessare correct for theslot_numberof the block. - Compute the

global_challenge = hash(global_randomness||slot_number). - Verify that current chain history size in segments is greater than winning

piece_index / NUM_PIECES. - Verify that

piece_offset ≤ max_pieces_in_sector - Re-derive

sector_id- Compute

public_key_hash = hash(public_key) - Re-derive the

sector_id = keyed_hash(public_key_hash, sector_index || history_size)

- Compute

- Verify that the

sector_idsubmitted has not expired:- Compute

sector_expiration_check_history_size = history_size + MIN_SECTOR_LIFETIMEandsector_max_lifetime = MIN_SECTOR_LIFETIME + 4 * history_size. - Take the archived segment

segment_commitmentatsector_expiration_check_history_size. - Compute

expiration_history_size = hash(sector_id||segment_commitment) mod (sector_max_lifetime - sector_expiration_check_history_size) + sector_expiration_check_history_size - Check that

expiration_history_sizeis smaller than current history size of the chain.

- Compute

- Re-derive the

sector_slot_challenge = global_challenge XOR sector_id - Re-derive the

s_bucket_audit_index = sector_slot_challenge mod NUM_S_BUCKETS - Re-derive the

evaluation_seedfor the record from thesector_idandpiece_offsetashash(sector_id || piece_offset) - Verify

proof_of_spacewithIs_Proof_Valid(K, evaluation_seed, s_bucket_audit_index, proof_of_space) - Ensure the

chunksatisfies the challenge criteria:- Compute the

masked_chunkasEncode(chunk, hash(proof_of_space)) - Compute the keyed hash

keyed_hash(sector_slot_challenge, masked_chunk)truncated toaudit_chunksize - Ensure the result falls within +/-

solution_range/2of theglobal_challenge

- Compute the

- Ensure the provided

chunkwas correctly extended from a history piece for the assigned record commitment:- Verify the

chunk_witnessfor the givenchunk,record_commitment, ands_bucket_audit_index

- Verify the

- Ensure the encoded record belongs to the assigned slot and that record also belongs to the history:

- Re-derive the

piece_indexfrom thepiece_offsetandhistory_sizethe same way as during pre-plotting (as described in Verifiable Sector Construction) - Retrieve the

segment_commitmentof the segment that contains the assignedpiece_index - Hash the

record_commitmentto obtain therecord_commitment_hash - Verify the

record_witnessfor thepiece_index,record_commitment_hashandsegment_commitment

- Re-derive the

- Ensure the farmer did not outsource solving to a third-party without revealing their private keys by verifying the

farmer_signaturewith thepublic_keyandchunk. - Verify the signature on the block content.

- If another block signed with the solution with same solution (

public_key,sector_index,piece_offset,chunk,chunk_audit_indexandslot_number) had already been received, report an equivocation bypublic_keyand ignore the block.

The above steps assume standard block and transaction verification.

Extraction

Serving data from the plot

- When requested a piece at

piece_indexidentifysector_indexandpiece_offsetwhere that piece is stored. - For the record plotted at

piece_offsetin that sector perform record recovery and compose the piece.

Recovering the Record

- From the record’s

encoded_chunks_usedbitfield determine which s-buckets contain chunks of this record. - Read all the

plotted_chunksof the parent record from their respective s-buckets. Set the encoded chunk valueNonein places whereencoded_chunks_usedis0. Total length ofplotted_chunksshould beNUM_CHUNKS/ERASURE_CODING_RATE. - Derive the

evaluation_seedfor the record fromsector_idandpiece_offsetwithin this sector asevaluation_seed = hash(sector_id || piece_offset) - Derive a Chia proof-of-space table

pos_table = generate(K, evaluation_seed) - Iterate through each

(s_bucket, plotted_chunk)inplotted_chunks:- If

plotted_chunkisNone, continue to next chunk. - Else, query the

pos_tablefor a valid proof-of-spaceproof_of_spacefor this chunk asfind_proof(pos_table, s_bucket). The proof should exist if the chunk was encoded and plotted. - Decode the chunk in-place with

decode(plotted_chunk, hash(proof_of_space)).

- If

- If the number of

plotted_chunksis less thenNUM_CHUNKS, we need extra chunks to recover the record. Insert eachextra_chunkin places ofNoneinplotted_chunksfrom the beginning, however many extra chunks there are. - Recover the source

recordfrom the decoded chunks withrecover(plotted_chunks)).

Composing the Piece

- Retrieve the

record_commitmentandrecord_witnessfrom the sector table. - Return the recovered piece as

record || record_commitment || record_witness.

Randomness Updates

Global randomness is updated every slot with the output of Proof-of-Time chain for that slot. See Proof-of-Time Specification.

Solution Range Updates

Initial solution range INITIAL_SOLUTION_RANGE is computed based on a single plotted sector with the goal of getting a single winning chunk in each slot with probability SLOT_PROBABILITY, as follows:

Let num_audit_chunks be the number of tickets read during single slot audit in a sector. For each piece (max_pieces_in_sector), we audit a single full chunk in a single s-bucket. The probability of s-bucket containing a full chunk at that position is NUM_CHUNKS/NUM_S_BUCKETS (1/2). Thus, a farmer has this many tickets at each slot:

To compute initial solution range for one sector, maximum possible solution range (U64::MAX) is divided by num_chunks and multiplied by slot probability:

INITIAL_SOLUTION_RANGE becomes solution_range for the first era.

Global solution_range is adjusted every era accordingly to actual and expected blocks produced per era to keep block production at the same pace while space pledged on the network change:

- After every

ERA_DURATION_IN_BLOCKSnumber of blocks, a new era begins. - At the start of a new era, compute and store new

solution_range

.

Conversion Rate Between Solution Range and Space Pledged

The relationship between the solution range and the amount of space pledged is dynamically calculated using the function solution_range_to_sectors. This function computes the number of sectors corresponding to a given solution range by adjusting for slot probability and the configuration of data within sectors. Specifically, the maximum possible solution range (SolutionRange::MAX) is first reduced according to the slot probability, which reflects the desired frequency of successful block production. This is further adjusted by the distribution of data, particularly the ratio of the number of chunks per s-bucket in each sector (MAX_PIECES_IN_SECTOR * Record::NUM_CHUNKS / Record::NUM_S_BUCKETS), to account for the probability of an occupied s-bucket being successfully audited in the verification process. The resulting figure is then divided by the current solution range to determine the total number of sectors that this solution range can effectively cover.

const fn solution_range_to_sectors(solution_range: SolutionRange) -> u64 {

let sectors = SolutionRange::MAX

// Account for slot probability

/ SLOT_PROBABILITY.1 * SLOT_PROBABILITY.0

// Now take sector size and probability of hitting occupied s-bucket in sector into account

/ (MAX_PIECES_IN_SECTOR as u64 * Record::NUM_CHUNKS as u64 / Record::NUM_S_BUCKETS as u64);

// Take solution range into account

sectors / solution_range

}